Accessibility Isn't a Side Quest: It's the Story AI Has Been Waiting to Tell

One year is not a long time. Yet from Global Accessibility Awareness Day (GAAD) 2024 to GAAD 2025, it feels as if we have crossed an entire era in AI. What once seemed distant, experimental, even niche, has quietly become essential.

The twist: the breakthrough did not come from chasing shiny features; it came from chasing the toughest users. Build for people who are blocked at every turn and the tech levels up for everyone else.

I have spent the past year watching AI trade hype for hard results, the flashy demo for the everyday win. The shift is quiet, precise, and impossible to ignore.

Here is the inside tour of all that's changed in the past year.

1. Text-to-Speech Found Its Voice

In 2024, synthetic speech had reached a technical peak: clear, articulate, but unmistakably robotic. Voices could deliver words, but not feelings. They spoke, but they didn’t speak to you.

That has changed.

Today, open-source projects like Dia by Nari Labs and Sesame are leading the charge in emotional, lifelike speech synthesis. They bring the subtle music of human expression (tone, rhythm, hesitation) back into digital conversations.

More importantly, they democratize this technology. By keeping voice AI in public hands, these projects prevent a future where our digital voices are monopolized by a few tech giants.

Voice cloning has also gone mainstream. Platforms like Cartesia and ElevenLabs can now replicate your voice using just a 30-second audio clip, even if it's potato quality.

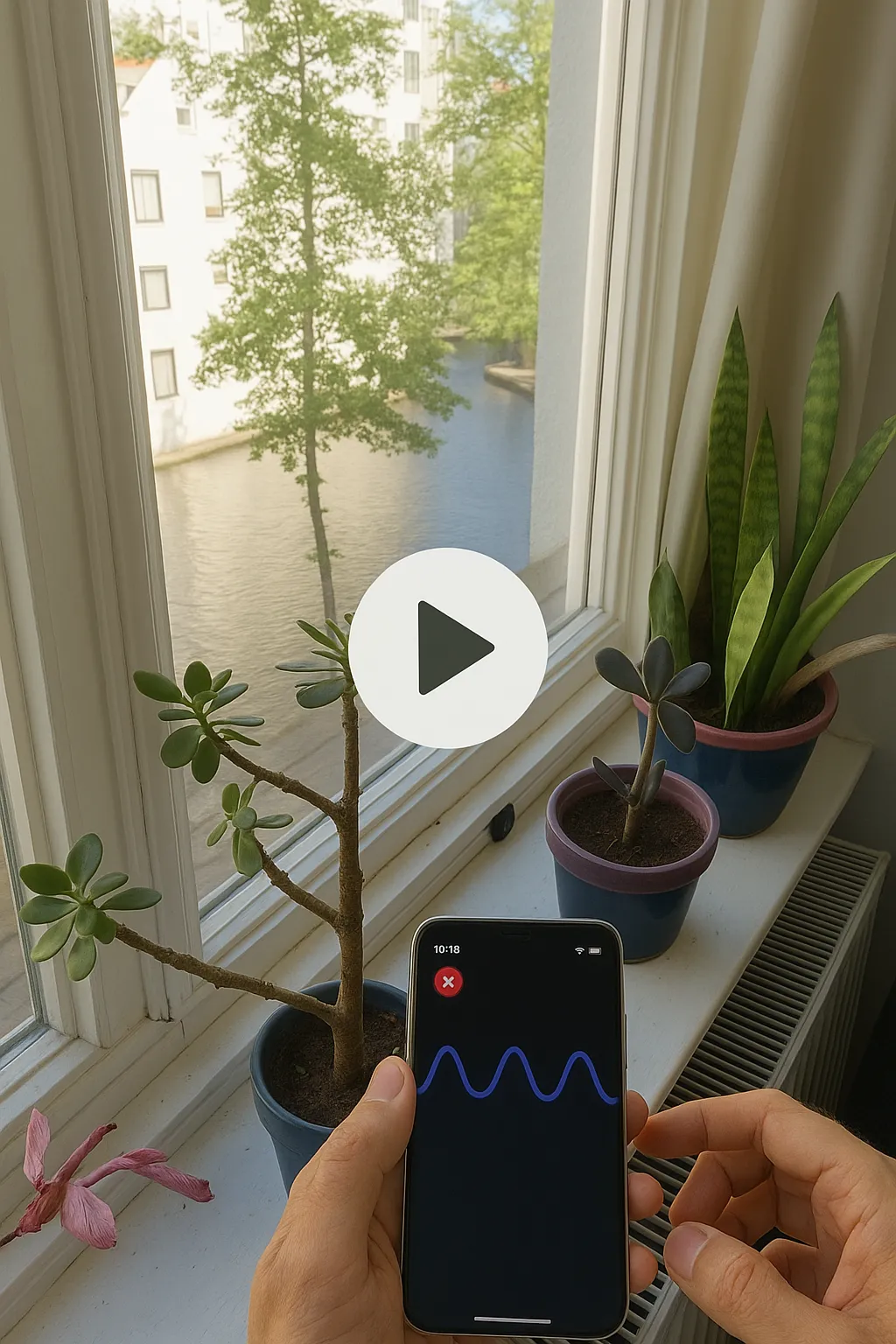

At Envision, we are exploring this frontier through ally. Here's ally in action.

2. Smart Glasses Became Part of the Everyday

In 2024 smart glasses sat on the cusp of relevance. Twelve months later they have stepped firmly into the mainstream. A consumer can now choose between Ray-Ban Meta, Google’s Project Moohan, Solos, Mentra, and of course Envision, each model tuned for different use cases and budgets.

Wider hardware choice has been matched by an openness of spirit: manufacturers are releasing SDKs, courting independent developers, and inviting third-party apps that push accessibility forward.

At Envision, our collaboration with Meta’s Project Aria has shown how powerful this new ecosystem can be. By blending computer vision with conversational AI, we’ve created glasses that help blind and low-vision users read signs, recognise products, and navigate public spaces with confidence.

3. AI Agents Grew From Assistants to Capable Collaborators

In 2024 an agent would answer a question, then wait.

By GAAD 2025 it has started moving first, breaking problems into steps, guessing your next need, and wrapping up the job before you notice.

At Envision our form-filling agent already turns a wall of unlabeled fields into a finished PDF for blind and low-vision users. Manus AI shows the same momentum with agents that dig for information, draft reports, and trigger follow-ups without a human prompt.

Why does that matter? Because the interface, often the very thing that blocks people, quietly disappears.

A glimpse of what could come in the next year:

- A blind customer opens her banking app. Before the home screen finishes loading, the agent fetches the new compliance form, pre-fills every field, and waits for a single voice “yes” to send it.

- A wheelchair user says “Dentist at nine.” Overnight the agent books an accessible ride, locks in a step-free rail route, streams turn-by-turn audio to smart glasses, and alerts the clinic if an elevator outage shifts the arrival time.

- A Deaf entrepreneur joins a video call. The agent paints a sign-language avatar on-screen, drops crisp captions into the transcript, and builds a “things they said while you typed” list for later review.

No special versions, no extra clicks. Just technology that works because it was designed for everyone.

Accessibility Is Not Just Inclusion: It's Accelerating AI Innovation

Rewind to GAAD 2024, hit play, and the difference pops. Accessibility isn’t a side-quest about inclusion anymore; it is rocket fuel for AI itself.

Build for the toughest edge cases and everyone else gets an upgrade for free. That is why the tech is growing not just smarter but unmistakably more human. Agents are now syncing with smart glasses, watches, and whatever comes next. Soon they won’t merely assist; they will flat-out empower.

A future where independence and agency are not luxuries, but defaults.

Let’s keep moving in that direction.

This article was originally published on LinkedIn on Global Accessibility Awareness Day, May 15, 2025.
